How to Filter Duplicate Content from a 2 TB Text Dictionary File

2025-03-17 12:01:01

In password cracking and data processing, a 2 TB plain-text dictionary file is a powerful resource. However, a file this large usually contains a great deal of duplicate data, which wastes storage space and can slow down later operations that rely on the dictionary, such as password lookups. Filtering out duplicate content effectively is therefore a crucial step.

1. Understand the source and impact of duplicate data

First, we need to understand why there is so much duplicate data. A dictionary file is usually built from multiple data sources that partially overlap. For example, when data is collected from different word lists, common password sets, and lists of character combinations, some basic words or simple password combinations will appear in more than one source.

This duplication has several negative effects. From a storage point of view, 2 TB is already a huge amount of space, and every duplicate line wastes part of it. When the dictionary is used for password cracking or other operations, duplicates also cause unnecessary lookups and comparisons. For example, if the algorithm compares each dictionary entry with the target password one by one, every duplicate entry adds a comparison that cannot produce a new result, which slows down the entire cracking process.

2. Filtering method based on text processing tools

Using the tools available on Windows

- Use PowerShell

- On Windows, PowerShell provides rich text processing capabilities. We can use the following PowerShell script to remove duplicate lines:

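```powershell
# Read every line of the dictionary into memory
$lines = Get-Content "dictionary.txt"

# Collect each distinct line here, in first-seen order
$uniqueLines = @()

foreach ($line in $lines) {
    # -cnotcontains compares case-sensitively, so "Password" and "password"
    # are kept as separate entries (plain -notcontains ignores case)
    if ($uniqueLines -cnotcontains $line) {
        $uniqueLines += $line
    }
}

$uniqueLines | Set-Content "unique_dictionary.txt"
```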

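This script first reads all the lines of "dictionary.txt" into the array "$lines". It then loops over each line and, if the line is not yet in the new array "$uniqueLines", appends it. Finally, it saves the contents of the new array to "unique_dictionary.txt". Because the whole file is held in memory and every line is compared against all the lines kept so far, this approach is only practical for small files, not for the full 2 TB dictionary.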

3. Divide and conquer algorithm

- Since our dictionary file is very large (2 TB), processing it directly may run into problems such as running out of memory. A divide and conquer approach handles this well: we split the large file into several smaller sub-files, for example by a fixed number of lines or by file size.

- Duplicate filtering is then applied to each sub-file individually, and the processed sub-files are merged back into a single file. The merge step must check for duplicates again, because the same content may appear in different sub-files.
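
The split, filter, and merge steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the scripts used for the actual job: the function names, the `lines_per_chunk` default, and the `work_dir` parameter are all hypothetical. It also swaps in one specific way of handling the merge, namely sorting each sub-file so that a k-way merge brings identical lines from different sub-files next to each other. As a consequence, the output is in sorted order rather than the original order.

```python
import heapq
import os
import tempfile
from itertools import islice


def write_sorted_chunk(lines, tmp_dir):
    """Deduplicate one chunk in memory, sort it, and save it as a temp file."""
    fd, path = tempfile.mkstemp(dir=tmp_dir, suffix=".chunk")
    with os.fdopen(fd, "wb") as out:
        out.writelines(sorted(set(lines)))
    return path


def dedupe_large_file(src, dst, lines_per_chunk=1_000_000, work_dir=None):
    """Write each distinct line of src to dst exactly once, in sorted order."""
    with tempfile.TemporaryDirectory(dir=work_dir) as tmp_dir:
        # Step 1: split by line count; each sub-file is deduplicated on its own.
        chunk_paths = []
        with open(src, "rb") as f:
            # Normalize line endings so "abc\r\n" and "abc\n" are the same entry.
            normalized = (line.rstrip(b"\r\n") + b"\n" for line in f)
            while chunk := list(islice(normalized, lines_per_chunk)):
                chunk_paths.append(write_sorted_chunk(chunk, tmp_dir))

        # Step 2: merge the sorted sub-files. Equal lines from different
        # sub-files come out adjacent, so comparing with the previous line
        # is enough to drop the cross-file duplicates.
        files = [open(p, "rb") for p in chunk_paths]
        try:
            with open(dst, "wb") as out:
                previous = None
                for line in heapq.merge(*files):
                    if line != previous:
                        out.write(line)
                        previous = line
        finally:
            for f in files:
                f.close()
```

A call such as `dedupe_large_file("dictionary.txt", "unique_dictionary.txt", work_dir="D:/tmp")` (paths are placeholders) needs scratch space for the sub-files, so `work_dir` should point at a disk with enough room. The sketch opens every sub-file at once during the merge, so for a file of this size the chunk size would have to be large enough to keep the number of sub-files below the operating system's open-file limit, or the merge would have to be done in several passes.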

4. Verify the filtering results

After duplicate filtering, we need to verify that the results are correct. One simple method is to randomly sample a few lines and count how often each one occurs in the original and filtered files. If a line appears more than once in the original file and exactly once in the filtered file, the filtering worked for that line.
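
The sampling check could look roughly like the sketch below. The function names and the default sample size are hypothetical, and reservoir sampling is just one convenient way to pick random lines from a file too large to load. Treat it as an illustration of the idea rather than a definitive tool.

```python
import random
from collections import Counter


def sample_lines(path, k, seed=0):
    """Reservoir-sample up to k lines from a file in a single pass."""
    rng = random.Random(seed)
    sample = []
    with open(path, "rb") as f:
        for i, raw in enumerate(f):
            line = raw.rstrip(b"\r\n")
            if i < k:
                sample.append(line)
            else:
                # Replace an earlier pick with probability k / (i + 1).
                j = rng.randint(0, i)
                if j < k:
                    sample[j] = line
    return sample


def count_occurrences(path, targets):
    """Count how often each target line appears in the file."""
    wanted = set(targets)
    counts = Counter()
    with open(path, "rb") as f:
        for raw in f:
            line = raw.rstrip(b"\r\n")
            if line in wanted:
                counts[line] += 1
    return counts


def spot_check(original, filtered, k=20, seed=0):
    """Map each sampled line to (count in original, count in filtered)."""
    targets = sample_lines(original, k, seed)
    before = count_occurrences(original, targets)
    after = count_occurrences(filtered, targets)
    return {line: (before[line], after[line]) for line in set(targets)}
```

Every sampled line should have a second value of exactly 1. A value of 0 means an entry was lost, and a value above 1 means duplicates survived. Note that this reads the original file twice and the filtered file once, which is slow at 2 TB but uses very little memory.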

In addition, we can compare the sizes of the original and filtered files. If the filtered file is significantly smaller than the original and behaves correctly in subsequent tests, such as a simple password lookup test that confirms the dictionary works properly and no passwords are missing, that also indicates the duplicate filtering worked well.

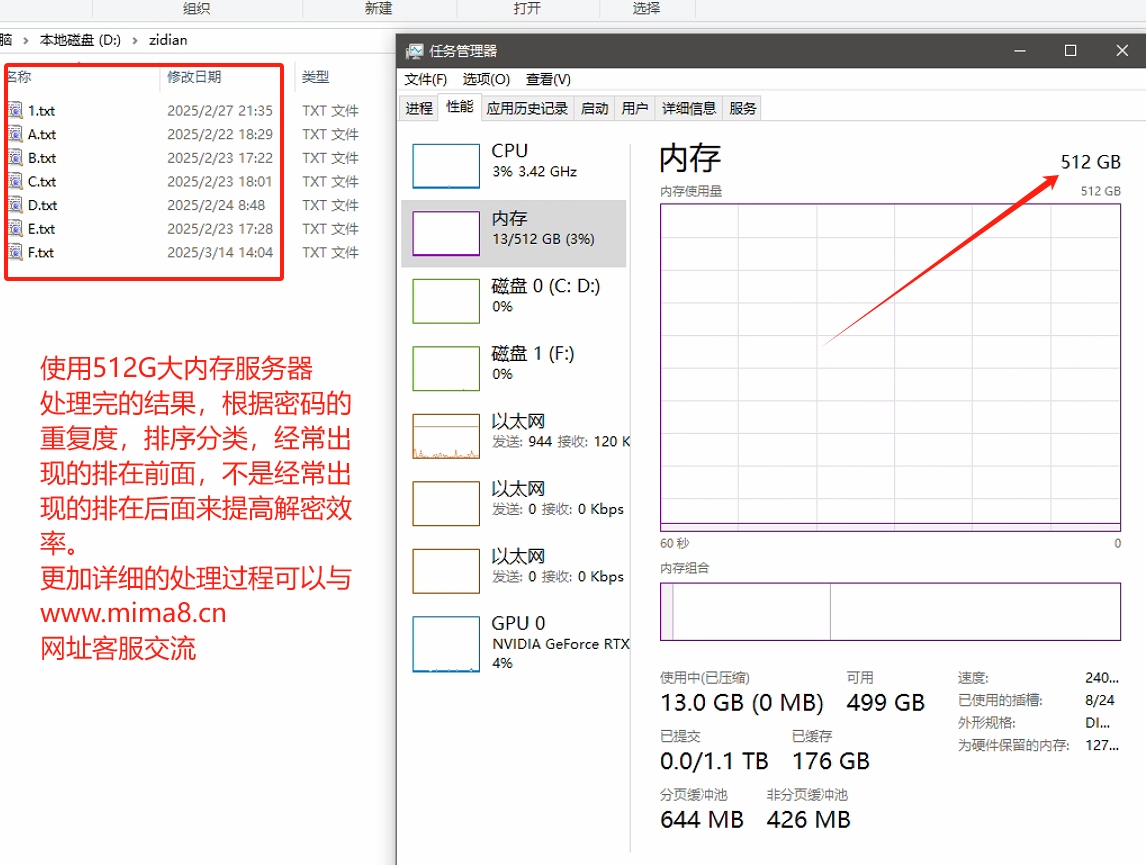

Our server has 512 GB of memory and high-speed NVMe storage, and the job still took about half a month to complete. Along the way we worked out a set of efficient processing scripts. If you have a similar need, you can contact the website's customer service to discuss the pitfalls we ran into during duplicate filtering and to have processing scripts written for you.

Filtering duplicate content out of a 2 TB text dictionary file is challenging but necessary work. With a sensible choice of tools and algorithms, we can remove duplicates effectively and improve the quality and efficiency of the dictionary, which matters for password cracking and other applications built on it.